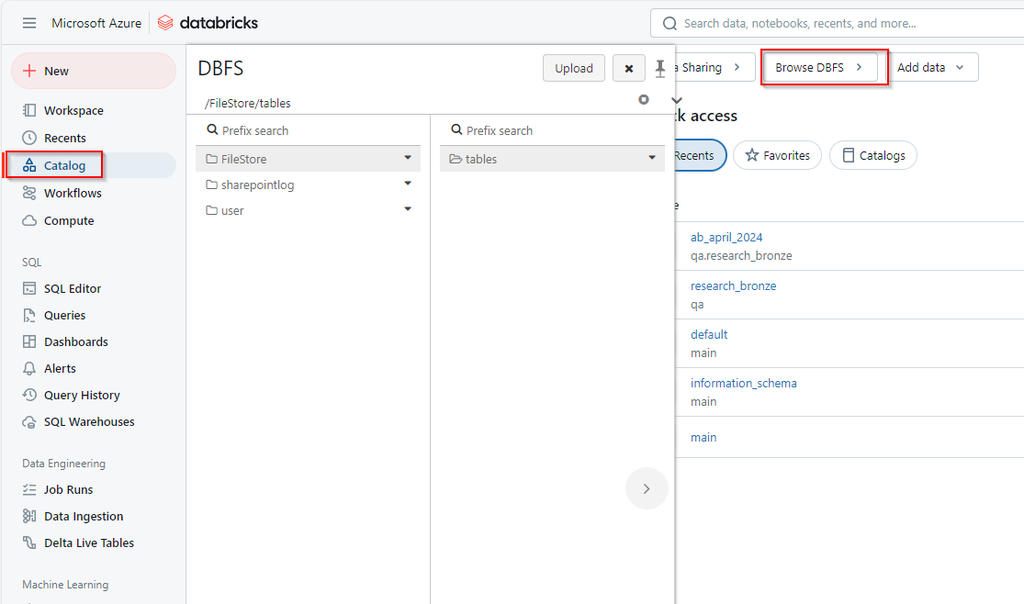

Databricks File System (DBFS) is a distributed file system mounted into a Databricks workspace and available on Databricks clusters. DBFS is an abstraction on top of scalable object storage.

Databricks recommends that you store data in mounted object storage rather than in the DBFS root. The DBFS root is not intended for production customer data.

The DBFS root is the default storage location provisioned for a Databricks workspace when the workspace is created. It resides in the cloud storage account associated with the workspace.

Databricks dbutils

**dbutils** is a set of utility functions provided by Databricks to help manage and interact with various resources in a Databricks environment, such as files, jobs, widgets, secrets, and notebooks. It is commonly used in Databricks notebooks to perform tasks like handling file systems, retrieving secrets, running notebooks, and controlling job execution.

Running dbutils.help() lists the available modules:

- credentials: DatabricksCredentialUtils -> Utilities for interacting with credentials within notebooks

- data: DataUtils -> Utilities for understanding and interacting with datasets (EXPERIMENTAL)

- fs: DbfsUtils -> Manipulates the Databricks filesystem (DBFS) from the console

- jobs: JobsUtils -> Utilities for leveraging jobs features

- library: LibraryUtils -> Utilities for session isolated libraries

- meta: MetaUtils -> Methods to hook into the compiler (EXPERIMENTAL)

- notebook: NotebookUtils -> Utilities for the control flow of a notebook (EXPERIMENTAL)

- preview: Preview -> Utilities under preview category

- secrets: SecretUtils -> Provides utilities for leveraging secrets within notebooks

- widgets: WidgetsUtils -> Methods to create and get bound value of input widgets inside notebooks

1. dbutils.fs (File System Utilities)

dbutils.fs.help()

dbutils.fs lets you work with DBFS and with cloud object storage (such as Azure Blob Storage, Azure Data Lake Storage Gen2, or Amazon S3) using file-system-style commands, much as you would on a local file system.

List Files:

```python
dbutils.fs.ls("/mnt/")
```
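dbutils.fs.ls lists a single directory level only. If you need every file under a mount, a small helper can walk the tree. Here is a minimal sketch, assuming (as on current runtimes) that directory entries returned by ls have a name ending in "/". The names iter_files and InMemoryFs and the sample paths are hypothetical; the in-memory class only stands in for dbutils.fs so the sketch runs off-cluster.

```python
from collections import namedtuple
from typing import Dict, Iterator, List

# Mirrors the path/name/size fields of the FileInfo records returned by dbutils.fs.ls.
FileInfo = namedtuple("FileInfo", ["path", "name", "size"])


class InMemoryFs:
    """Hypothetical stand-in for dbutils.fs, for trying the helper off-cluster."""

    def __init__(self, tree: Dict[str, List[FileInfo]]):
        self.tree = tree

    def ls(self, path: str) -> List[FileInfo]:
        return self.tree[path]


def iter_files(fs, path: str) -> Iterator[FileInfo]:
    """Yield every file under `path`, descending into subdirectories."""
    pending = [path]
    while pending:
        for info in fs.ls(pending.pop()):
            if info.name.endswith("/"):
                pending.append(info.path)  # directory entry: list it later
            else:
                yield info  # regular file


fs = InMemoryFs({
    "dbfs:/mnt/mydata/": [
        FileInfo("dbfs:/mnt/mydata/a.csv", "a.csv", 10),
        FileInfo("dbfs:/mnt/mydata/sub/", "sub/", 0),
    ],
    "dbfs:/mnt/mydata/sub/": [FileInfo("dbfs:/mnt/mydata/sub/b.csv", "b.csv", 5)],
})
print(sorted(f.name for f in iter_files(fs, "dbfs:/mnt/mydata/")))  # ['a.csv', 'b.csv']
```

In a notebook you would pass dbutils.fs itself, for example sum(f.size for f in iter_files(dbutils.fs, "/mnt/mydata/")). The helper uses an explicit stack rather than recursion, so deep directory trees don't hit Python's recursion limit.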

Mount Azure Blob Storage:

```python
dbutils.fs.mount(
    source="wasbs://<container>@<storage-account>.blob.core.windows.net",
    mount_point="/mnt/myblobstorage",
    extra_configs={"<key>": "<value>"},
)
```
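dbutils.fs.mount raises an error if the mount point is already in use, which is a common failure when a notebook is re-run. One way to make mounting idempotent is to check dbutils.fs.mounts() first. A minimal sketch, assuming the MountInfo records expose mountPoint and source (as they do in Databricks) and that sources can be compared as plain strings; ensure_mounted, wasbs_source, account_key_config and FakeMountFs are hypothetical helpers, and the fake only stands in for dbutils.fs so the code runs off-cluster.

```python
from collections import namedtuple

# Mirrors the mountPoint/source fields of the records returned by dbutils.fs.mounts().
MountInfo = namedtuple("MountInfo", ["mountPoint", "source"])


class FakeMountFs:
    """Hypothetical stand-in for dbutils.fs that records mounts in memory."""

    def __init__(self):
        self._mounts = []

    def mounts(self):
        return list(self._mounts)

    def mount(self, source, mount_point, extra_configs=None):
        self._mounts.append(MountInfo(mount_point, source))


def wasbs_source(container: str, account: str) -> str:
    """Build the wasbs:// source URI for an Azure Blob Storage container."""
    return f"wasbs://{container}@{account}.blob.core.windows.net"


def account_key_config(account: str, key: str) -> dict:
    """Config entry that authenticates the mount with a storage account key."""
    return {f"fs.azure.account.key.{account}.blob.core.windows.net": key}


def ensure_mounted(fs, source: str, mount_point: str, extra_configs: dict) -> bool:
    """Mount `source` at `mount_point` unless it is already mounted there.

    Returns True if a new mount was created, False if it already existed.
    Raises ValueError if the mount point is used by a different source.
    """
    for m in fs.mounts():
        if m.mountPoint == mount_point:
            if m.source.rstrip("/") != source.rstrip("/"):
                raise ValueError(f"{mount_point} is already mounted from {m.source}")
            return False
    fs.mount(source=source, mount_point=mount_point, extra_configs=extra_configs)
    return True


fs = FakeMountFs()
src = wasbs_source("mycontainer", "myaccount")
cfg = account_key_config("myaccount", "<storage-key>")
print(ensure_mounted(fs, src, "/mnt/mydata", cfg))  # True: new mount
print(ensure_mounted(fs, src, "/mnt/mydata", cfg))  # False: already mounted
```

On a cluster you would call ensure_mounted(dbutils.fs, src, "/mnt/mydata", account_key_config("myaccount", dbutils.secrets.get(scope="my-secret-scope", key="storage-key"))); the scope and key names there are placeholders.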

The URI scheme in source depends on the storage type:

- Azure Blob Storage: wasbs://
- Azure Data Lake Storage Gen2: abfss://
- Amazon S3: s3a://

Unmount:

```python
dbutils.fs.unmount("/mnt/myblobstorage")
```

Copy Files:

```python
dbutils.fs.cp("/mnt/source_file.txt", "/mnt/destination_file.txt")  # pass recurse=True to copy a directory
```

Remove Files:

```python
dbutils.fs.rm("/mnt/myfolder/", True)  # True to remove recursively
```

Move Files:

```python
dbutils.fs.mv("/mnt/source_file.txt", "/mnt/destination_file.txt")
```

2. dbutils.secrets (Secret Management)

dbutils.secrets is used to retrieve secrets stored in Databricks Secret Scopes. This is essential for securely managing sensitive data like passwords, API keys, or tokens.

dbutils.secrets.help()
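A common use of secrets is to pass credentials straight into a connection configuration, so they never appear in notebook source. A minimal sketch, assuming a secret scope with hypothetical keys db-user and db-password; jdbc_options and FakeSecrets are illustrative names, and the fake only replaces dbutils.secrets so the code runs locally. The option names url, dbtable, user and password are standard Spark JDBC options.

```python
from typing import Dict


class FakeSecrets:
    """Hypothetical stand-in for dbutils.secrets, for running the sketch off-cluster."""

    def __init__(self, store: Dict[str, Dict[str, str]]):
        self._store = store

    def get(self, scope: str, key: str) -> str:
        return self._store[scope][key]


def jdbc_options(secrets, scope: str, url: str, table: str) -> Dict[str, str]:
    """Build Spark JDBC reader options with credentials pulled from a secret scope.

    The key names "db-user" and "db-password" are hypothetical; use whatever
    keys your secret scope actually defines.
    """
    return {
        "url": url,
        "dbtable": table,
        "user": secrets.get(scope=scope, key="db-user"),
        "password": secrets.get(scope=scope, key="db-password"),
    }


secrets = FakeSecrets({"my-secret-scope": {"db-user": "etl_user", "db-password": "s3cret"}})
opts = jdbc_options(secrets, "my-secret-scope", "jdbc:postgresql://dbhost:5432/sales", "public.orders")
print(sorted(opts))  # ['dbtable', 'password', 'url', 'user']
```

On a cluster this would look like spark.read.format("jdbc").options(**jdbc_options(dbutils.secrets, "my-secret-scope", jdbc_url, "public.orders")).load(), where jdbc_url and the table name are placeholders. Databricks redacts secret values if you try to print them in notebook output.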

Get a Secret:

```python
my_secret = dbutils.secrets.get(scope="my-secret-scope", key="my-secret-key")
```

List Secrets (returns key metadata only, not the secret values):

```python
dbutils.secrets.list(scope="my-secret-scope")
```

List Secret Scopes:

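```python
dbutils.secrets.listScopes()
```

3. dbutils.widgets (Parameter Widgets)

dbutils.widgets creates input widgets (text boxes, dropdowns, combo boxes, and multiselects) in a notebook and reads their current values, which is how notebooks are parameterized.

```python
dbutils.widgets.help()
```

4. dbutils.notebook (Notebook Workflows)

dbutils.notebook provides functionality to run one notebook from another and pass data between them. It's useful when you want to build modular pipelines by chaining multiple notebooks.

```python
dbutils.notebook.help()
```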

Run Another Notebook:

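```python
dbutils.notebook.run("/path/to/other_notebook", 60, {"param1": "value1", "param2": "value2"})
```

This runs another notebook with a timeout (here 60 seconds) and passes parameters as a dictionary of strings. In the called notebook, each parameter is read as a widget value, for example dbutils.widgets.get("param1").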

Exit a Notebook:

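```python
dbutils.notebook.exit("Success")
```

This exits the notebook with a string value; when the notebook was started with dbutils.notebook.run, that string becomes the caller's return value.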
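Because dbutils.notebook.exit returns only a string, a convenient way to hand back structured results is to serialize them as JSON in the called notebook and parse them in the caller. A minimal sketch; to_exit_value, from_exit_value and the status/rows_written fields are hypothetical.

```python
import json
from typing import Any, Dict


def to_exit_value(payload: Dict[str, Any]) -> str:
    """Serialize a result dict for dbutils.notebook.exit, which takes a string."""
    return json.dumps(payload)


def from_exit_value(value: str) -> Dict[str, Any]:
    """Parse the string returned by dbutils.notebook.run back into a dict."""
    return json.loads(value)


# Called notebook (hypothetical fields):
#   dbutils.notebook.exit(to_exit_value({"status": "ok", "rows_written": 1250}))
# Calling notebook:
#   result = from_exit_value(dbutils.notebook.run("/path/to/notebook", 60, {}))

exit_value = to_exit_value({"status": "ok", "rows_written": 1250})
print(from_exit_value(exit_value)["rows_written"])  # 1250
```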

Return Value from a Notebook:

```python
result = dbutils.notebook.run("/path/to/notebook", 60, {"param": "value"})
print(result)  # the string passed to dbutils.notebook.exit in the called notebook
```

5. dbutils.jobs (Job Utilities)

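dbutils.jobs provides job-related utilities. Its main feature, dbutils.jobs.taskValues, lets the tasks of a multi-task job share small values within a run: an upstream task sets a value under a key, and downstream tasks read it.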

dbutils.jobs.help()

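Set and Get Task Values:

```python
# In an upstream task: publish a value under a key
dbutils.jobs.taskValues.set(key="my_key", value=42)

# In a downstream task: read the value set by the task named "<task_key>".
# debugValue is returned when the notebook runs interactively, outside a job.
value = dbutils.jobs.taskValues.get(taskKey="<task_key>", key="my_key", default=0, debugValue=0)
```

6. dbutils.library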

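dbutils.library manages notebook-scoped (session-isolated) Python libraries. Its install and installPyPI methods are deprecated and have been removed in Databricks Runtime 11.0 and above; on those runtimes, install notebook-scoped libraries with the %pip magic command instead:

```
%pip install numpy
```

Legacy runtimes (Databricks Runtime 10.4 LTS and below) only:

```python
dbutils.library.installPyPI("numpy")
```

Example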

```python
# Mount Azure Blob Storage using dbutils.fs
# (in practice, read the storage key from a secret scope instead of hard-coding it)
dbutils.fs.mount(
    source="wasbs://mycontainer@myaccount.blob.core.windows.net",
    mount_point="/mnt/mydata",
    extra_configs={"fs.azure.account.key.myaccount.blob.core.windows.net": "<storage-key>"},
)

# List contents of the mount
display(dbutils.fs.ls("/mnt/mydata"))

# Get a secret from a secret scope
db_password = dbutils.secrets.get(scope="my-secret-scope", key="db-password")

# Create a dropdown widget to choose a dataset
dbutils.widgets.dropdown("dataset", "dataset1", ["dataset1", "dataset2", "dataset3"], "Choose Dataset")

# Get the selected dataset value
selected_dataset = dbutils.widgets.get("dataset")
print(f"Selected dataset: {selected_dataset}")

# Remove all widgets after use
dbutils.widgets.removeAll()

# Run another notebook and pass parameters
result = dbutils.notebook.run("/path/to/notebook", 60, {"input_param": "value"})
print(result)
```
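The example above runs one child notebook at a time. To run several independent notebooks concurrently, a common approach is to call dbutils.notebook.run from a thread pool. A minimal sketch, assuming the child notebooks don't depend on each other; run_notebooks_in_parallel, fake_run and the run-dict layout are hypothetical, and fake_run only stands in for dbutils.notebook.run so the code runs off-cluster.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_notebooks_in_parallel(
    run: Callable[[str, int, Dict[str, str]], str],
    runs: List[Dict],
    max_workers: int = 4,
) -> List[str]:
    """Run several notebooks concurrently; return their exit values in input order.

    Each entry in `runs` has "path", "timeout" and "args" keys (a layout chosen
    for this sketch). Inside Databricks, pass dbutils.notebook.run as `run`.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(run, r["path"], r["timeout"], r["args"]) for r in runs]
        # result() re-raises any exception raised by a child run
        return [f.result() for f in futures]


def fake_run(path, timeout_seconds, args):
    return f"{path}:{args['dataset']}"


runs = [
    {"path": "/path/to/notebook", "timeout": 60, "args": {"dataset": d}}
    for d in ["dataset1", "dataset2", "dataset3"]
]
print(run_notebooks_in_parallel(fake_run, runs))
# ['/path/to/notebook:dataset1', '/path/to/notebook:dataset2', '/path/to/notebook:dataset3']
```

Keep max_workers modest: every child run uses resources on the same cluster.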

Magic Commands

Comparison of %fs, %sh, dbutils.fs, and Python's os module for working with files:

| Aspect | %fs (Magic Command) | %sh (Magic Command) | dbutils.fs (Databricks Utilities) | os (Python OS Module) |
|---|---|---|---|---|
| Example Usage | %fs ls /databricks-datasets | %sh ls /tmp | dbutils.fs.ls("/databricks-datasets") | import os; os.listdir("/tmp") |
| Cloud Storage Mounts | Can access mounted cloud storage paths. | Works on the driver's local file system; reaches DBFS mounts only through the /dbfs FUSE path (e.g. ls /dbfs/mnt/...), where available. | Can mount external cloud storage (e.g., S3, Azure Blob) to DBFS and access it. | Works on the driver's local file system; reaches DBFS mounts only through the /dbfs FUSE path, where available. |
| Use Case | Lightweight access to DBFS for listing, copying, and removing files. | Run shell commands on the driver node from a notebook. | Programmatic, flexible access to DBFS and cloud storage. | Access files and environment variables on the local (driver) node. |
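dbutils.fs and Spark address DBFS with dbfs:/ URIs (or bare paths such as /mnt/...), while local-file tools such as %sh and Python's os module see DBFS only through the /dbfs FUSE mount on the driver, where the cluster provides it. A minimal sketch of translating between the two forms; to_fuse_path and to_dbfs_uri are hypothetical helpers, and they assume any path already starting with /dbfs/ is a FUSE path.

```python
def to_fuse_path(path: str) -> str:
    """Translate a DBFS path (dbfs:/... or /...) to its local /dbfs/... FUSE path."""
    if path.startswith("/dbfs/"):
        return path  # already a local FUSE path
    if path.startswith("dbfs:"):
        path = path[len("dbfs:"):]
    if not path.startswith("/"):
        # e.g. abfss:// or s3a:// URIs have no FUSE equivalent
        raise ValueError(f"not a DBFS path: {path!r}")
    return "/dbfs" + path


def to_dbfs_uri(fuse_path: str) -> str:
    """Translate a local /dbfs/... path back to a dbfs:/ URI for dbutils.fs or Spark."""
    if not fuse_path.startswith("/dbfs/"):
        raise ValueError(f"not a /dbfs FUSE path: {fuse_path!r}")
    return "dbfs:" + fuse_path[len("/dbfs"):]


print(to_fuse_path("dbfs:/mnt/mydata/a.csv"))  # /dbfs/mnt/mydata/a.csv
print(to_dbfs_uri("/dbfs/mnt/mydata/a.csv"))  # dbfs:/mnt/mydata/a.csv
```

On a cluster with the FUSE mount, os.listdir(to_fuse_path("/databricks-datasets")) should list the same directory as %fs ls /databricks-datasets.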

If you have any questions, please do not hesitate to contact me at William . chen @ mainri.ca

(remove all spaces from the email address 😊)