Schema Evolution in Databricks refers to the ability to automatically adapt and manage changes in the structure (schema) of a Delta Lake table over time. It allows users to modify the schema of an existing table (e.g., adding or updating columns) without the need for a complete rewrite of the data.

Key Features of Schema Evolution

- Automatic Adaptation: Delta Lake can automatically evolve the schema of a table when the incoming data contains new columns, provided schema evolution is enabled. Data type changes are handled only in limited cases, such as a safe widening of a column's type; other type changes are rejected.

- Backward and Forward Compatibility: When a schema is merged, new columns are added without altering the existing ones, so new data can be written without breaking the existing schema. Queries that reference the existing columns keep working, and rows written before the change return null for the new columns.

Configuration for Schema Evolution

- mergeSchema

This option allows you to append new data with a schema that differs from the existing table schema. It merges the new schema into the table.

Usage: Typically used when you are appending data.

- overwriteSchema

This option is used when you want to completely replace the schema of the table with the schema of the new data.

Usage: Typically used when you are overwriting data.

mergeSchema

When new data has additional columns that aren’t present in the target Delta table, Delta Lake can automatically merge the new schema into the existing table schema.

# Append new data to the Delta table with automatic schema merging

df_new_data.write.format("delta").mode("append").option("mergeSchema", "true").save("/path/to/delta-table")
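To make "merging" concrete, here is a small plain-Python toy model of what an additive schema merge does. It is only a sketch and not Delta Lake's implementation: the names merge_schemas and read_row and the sample columns are made up for illustration, and the model rejects every type change, whereas Delta Lake accepts a few safe ones.

```python
# Toy model of additive schema merging; NOT Delta Lake's actual implementation.
from typing import Dict, Optional

Schema = Dict[str, str]  # column name -> type name, in column order


def merge_schemas(table: Schema, incoming: Schema) -> Schema:
    """Return the table schema extended with columns found only in `incoming`."""
    merged = dict(table)  # existing columns keep their position and type
    for name, dtype in incoming.items():
        if name not in merged:
            merged[name] = dtype  # new columns are appended at the end
        elif merged[name] != dtype:
            # Simplification: this toy model refuses all type changes.
            raise ValueError(
                f"type conflict on column {name!r}: {merged[name]} vs {dtype}"
            )
    return merged


def read_row(row: Dict[str, object], schema: Schema) -> Dict[str, Optional[object]]:
    """Project a stored row onto a schema; columns the row lacks become None."""
    return {name: row.get(name) for name in schema}


# Hypothetical schemas: the incoming data carries one extra column.
table_schema = {"id": "long", "name": "string"}
incoming_schema = {"id": "long", "name": "string", "country": "string"}

merged = merge_schemas(table_schema, incoming_schema)
old_row = read_row({"id": 1, "name": "Ann"}, merged)

print(list(merged))  # column order after the merge
print(old_row)       # a row written before the merge, read with the new schema
```

The point of the model is that existing columns are left alone and the new column is simply added at the end, so rows written earlier show it as empty.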

overwriteSchema

If you want to replace the entire schema (including removing existing columns), you can use the overwriteSchema option.

# Overwrite the existing Delta table schema with new data

df_new_data.write.format("delta").mode("overwrite").option("overwriteSchema", "true").save("/path/to/delta-table")
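The two options can be wrapped in one small helper that picks the option matching the write mode. This is a minimal sketch: write_delta and its evolve_schema parameter are hypothetical names, and pairing append with mergeSchema and overwrite with overwriteSchema follows the typical usage described above rather than a rule enforced by Delta Lake.

```python
def write_delta(df, path: str, mode: str, evolve_schema: bool = False) -> None:
    """Write `df` to a Delta table, choosing the schema option that fits `mode`.

    `df` is expected to be a Spark DataFrame; `path` is the table location.
    """
    if mode not in ("append", "overwrite"):
        raise ValueError(f"unsupported mode: {mode!r}")

    writer = df.write.format("delta").mode(mode)
    if evolve_schema:
        # append    -> mergeSchema: add the new columns to the table schema
        # overwrite -> overwriteSchema: replace the table schema outright
        option = "mergeSchema" if mode == "append" else "overwriteSchema"
        writer = writer.option(option, "true")
    writer.save(path)
```

With this in place, write_delta(df_new_data, "/path/to/delta-table", "append", evolve_schema=True) would behave like the mergeSchema write shown earlier. Leaving evolve_schema at False keeps Delta Lake's default schema enforcement.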

Configure spark.databricks.delta.schema.autoMerge.enabled

You can configure this setting at the following levels:

- Session Level (applies to a specific session or job)

- Cluster Level (applies to all jobs on the cluster)

Session-Level Configuration (Spark session level)

Once this is enabled, write and merge operations in the session allow schema evolution automatically, without setting mergeSchema on each write.

# Enable auto schema merging for the session

spark.conf.set("spark.databricks.delta.schema.autoMerge.enabled", "true")
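Because the session setting affects every later write, it can be useful to switch it on only around the operations that need it. Below is a minimal sketch of a context manager that does this; auto_merge_schema is a hypothetical helper name, and it assumes that an unset value can be treated as "false".

```python
from contextlib import contextmanager

AUTO_MERGE_KEY = "spark.databricks.delta.schema.autoMerge.enabled"


@contextmanager
def auto_merge_schema(spark):
    """Enable auto schema merging inside the `with` block, then restore it."""
    # Assumption: if the key was never set, treat the previous value as "false".
    previous = spark.conf.get(AUTO_MERGE_KEY, "false")
    spark.conf.set(AUTO_MERGE_KEY, "true")
    try:
        yield spark
    finally:
        # Put the old value back even if the write inside the block failed.
        spark.conf.set(AUTO_MERGE_KEY, previous)
```

A write placed inside `with auto_merge_schema(spark):` runs with schema evolution allowed, and the session returns to its earlier setting afterwards.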

Cluster-Level Configuration

This enables automatic schema merging for all operations on the cluster without needing to set it in each job.

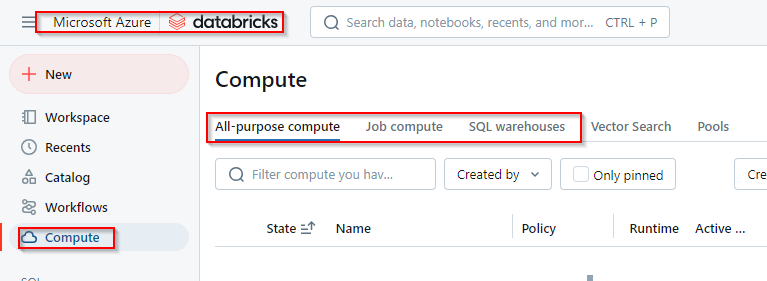

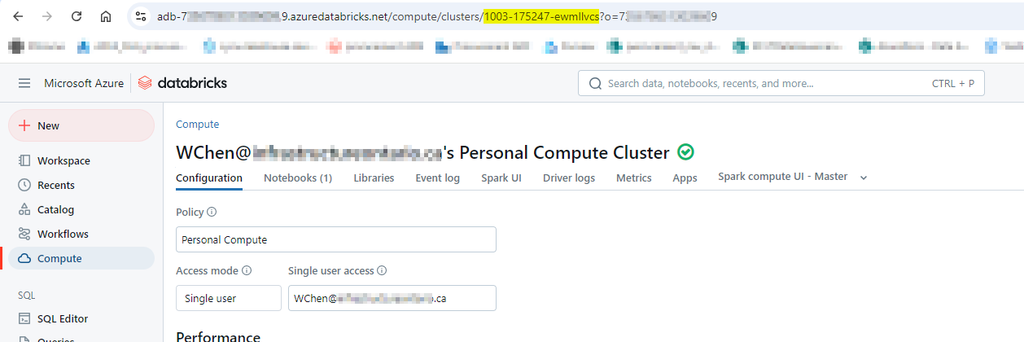

- Go to your Databricks Workspace.

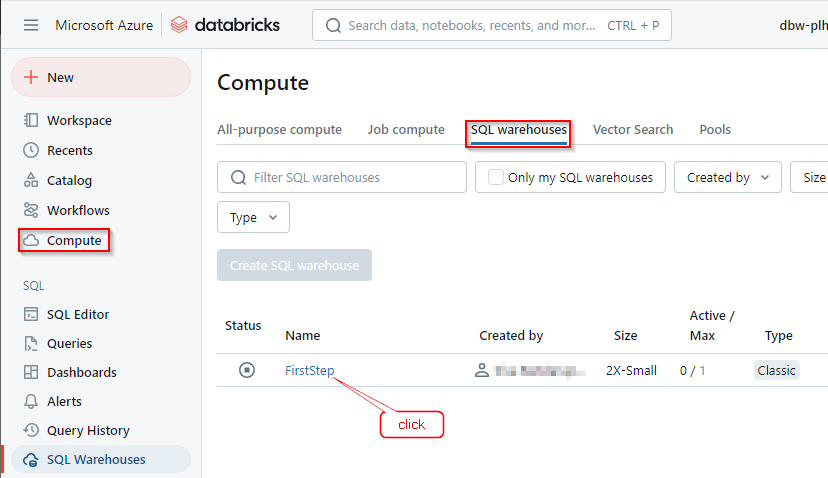

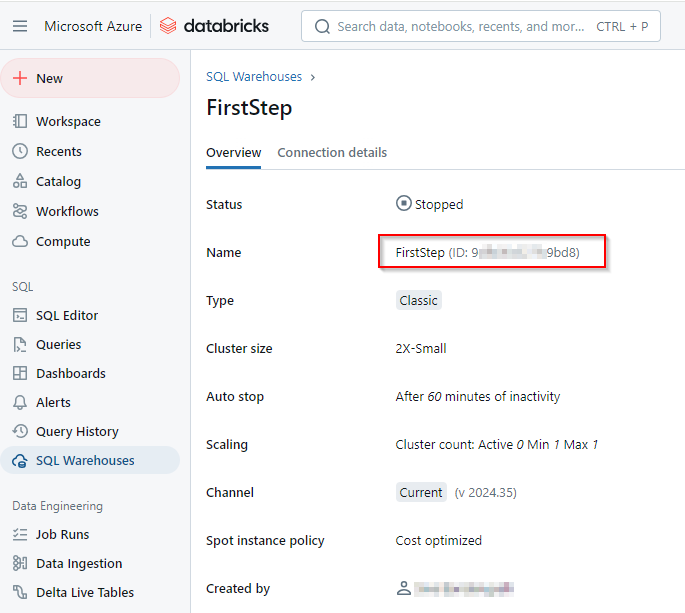

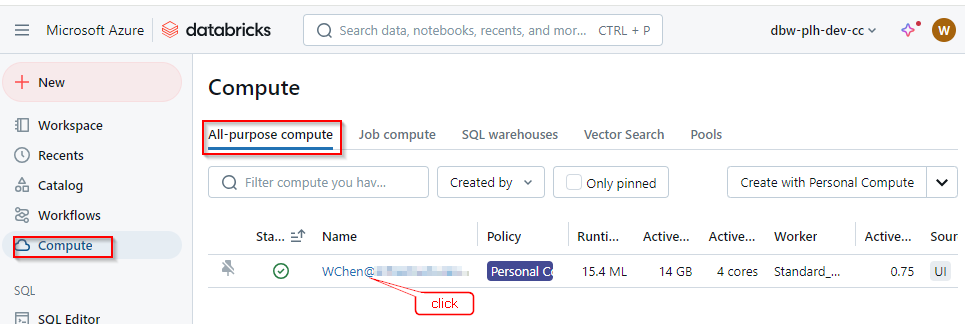

- Navigate to Clusters and select your cluster.

- Go to the Configuration tab.

- Under Spark Config (found under Advanced options in current workspaces), add the following configuration:

spark.databricks.delta.schema.autoMerge.enabled true
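After the cluster restarts, you may want to confirm from a notebook that the setting took effect. The following is a small sketch of such a check; is_auto_merge_enabled is a hypothetical helper name, and it assumes a missing value means the feature is off.

```python
AUTO_MERGE_KEY = "spark.databricks.delta.schema.autoMerge.enabled"


def is_auto_merge_enabled(spark) -> bool:
    """Return True if auto schema merging is on for this Spark session."""
    # Assumption: when the key is not set at all, the feature is treated as off.
    value = spark.conf.get(AUTO_MERGE_KEY, "false")
    return str(value).strip().lower() == "true"
```

In a notebook attached to the cluster, print(is_auto_merge_enabled(spark)) should show True once the cluster-level configuration is in place.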

Please do not hesitate to contact me if you have any questions at William . chen @ mainri.ca

(remove all spaces from the email address 😊)